AI has already been making its way into healthcare, but its impact is about to grow considerably. This month, Anthropic formally expanded its Claude AI suite into healthcare and life sciences with Claude for Healthcare. The initiative aims to move beyond generic chat assistants toward AI that interfaces with real clinical and research workflows. That shift brings both significant possibilities and real concerns.

The platform is built to integrate with electronic health records, CMS coverage databases, ICD-10 code registries, the National Provider Identifier (NPI) registry, and clinical literature databases such as PubMed. It is designed to be customizable and HIPAA-compliant, and to support the FHIR interoperability standard.

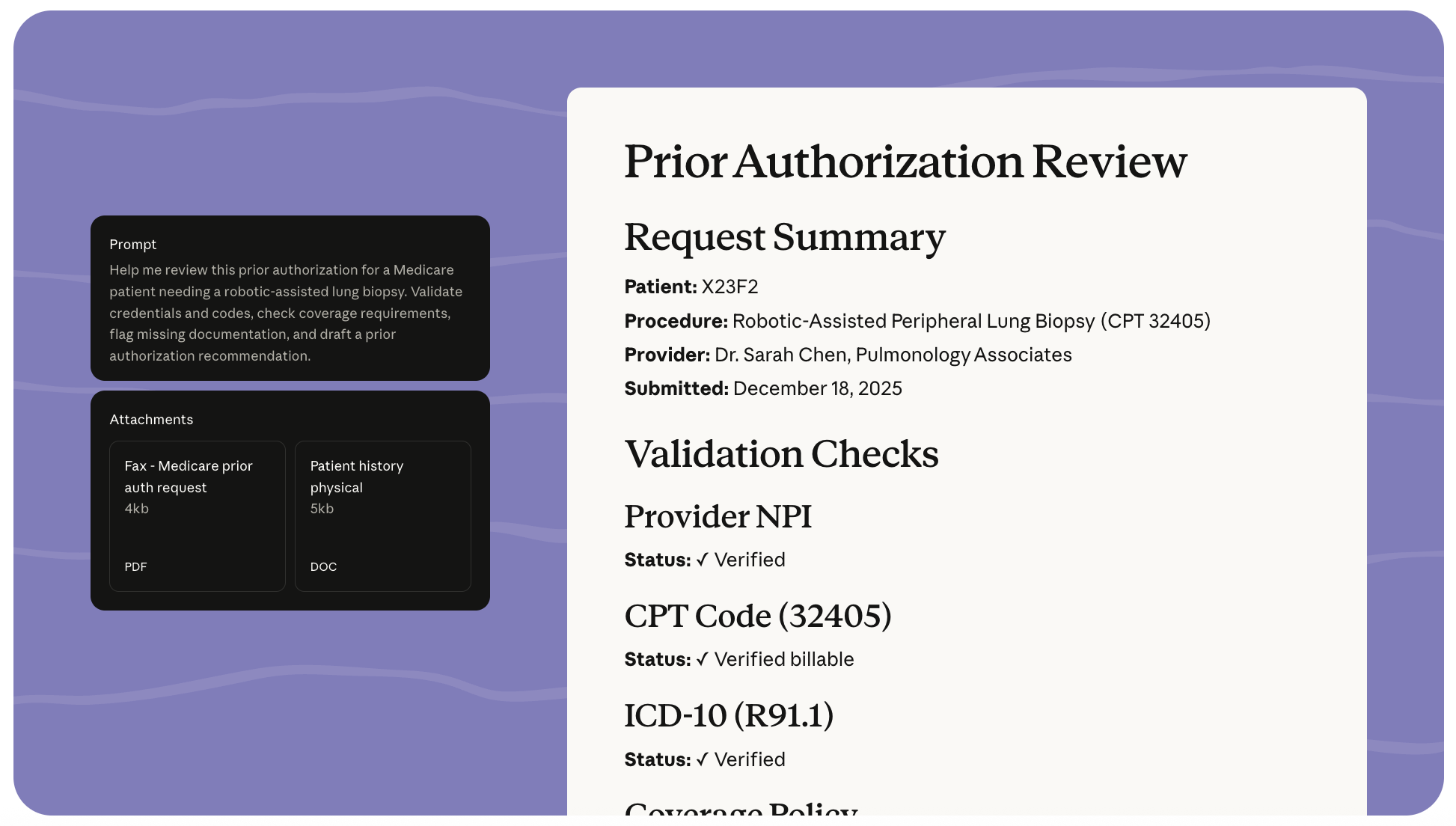

For clinicians and administrators, this means AI could take over processes that have traditionally been time-consuming. Tools within Claude for Healthcare can help draft prior-authorization reviews against coverage rules, look up coding information for diagnoses and procedures, verify provider credentials, and summarize current evidence from the scientific literature. In research settings, the system is purported to accelerate protocol design, enrollment tracking, and regulatory documentation by using agentic “skills” connected to structured databases such as ClinicalTrials.gov.

This integration into healthcare workflows reflects a broader shift in how artificial intelligence is being adopted across the sector. The goal is clear: reduce the administrative burden on clinicians and improve coding and billing accuracy, to the benefit of both providers and patients. It mirrors a wider trend across industries in which AI is positioned not just as an informational aid but as a practical operational partner.

Despite these possibilities, significant concerns remain. First, because large language models can produce inaccurate or “hallucinated” output, any AI-generated clinical content must be critically reviewed by qualified professionals. Safety policies explicitly state that outputs related to diagnoses, treatment recommendations, or patient care require human validation before use. Second, although the data privacy protections are designed to meet regulatory standards and Claude’s architecture reportedly keeps health data out of its training pipeline, extending AI into sensitive systems raises new questions about governance, risk management, and patient trust. Finally, broader workforce implications, such as shifts in revenue-cycle staffing or redefined administrative roles, warrant careful planning at the institutional level.

Anthropic’s launch of Claude for Healthcare marks an important milestone in the integration of AI into health systems and life sciences research. For medical professionals, it offers an opportunity to streamline complex tasks and serves as a reminder that responsible implementation requires rigorous oversight, validation, and governance. As these tools mature, clinician involvement in their design and evaluation will be essential to ensure they enhance care without compromising safety or confidentiality.